Snowflake Consulting

- Snowflake is built on a proprietary cloud-optimized database architecture.

- It runs on the top three public cloud providers (AWS, Azure and GCP) with multi-region support.

- Snowflake processes 500M queries per day and reported a net revenue retention rate of 158% and revenue growth of 121% in Q2 2020 (1).

- Cazton was one of the early adopters of Snowflake and has been using it to build secure and scalable data workloads for data analytics. We have helped clients save millions of dollars by providing world-class, high-quality solutions and expedited delivery.

As demand for cloud computing continues to grow, enterprises and small to mid-sized organizations are embracing services provided by public and private cloud platforms. One platform that combines the flexibility of big data platforms, the elasticity of the cloud and the power of data warehousing is Snowflake.

Snowflake is an analytics data warehouse provided as Software-as-a-Service (SaaS) that runs entirely on cloud infrastructure. It lets you store data in a single solution and powers a wide variety of workloads in parallel, including data warehousing, data engineering, data lakes, data science and data exchange. Snowflake does not require you to install or configure any software to manage your data. Simply put, you can upload your structured and semi-structured data and start querying it with ANSI standard SQL. Snowflake handles all configuration, management and maintenance of the underlying infrastructure.

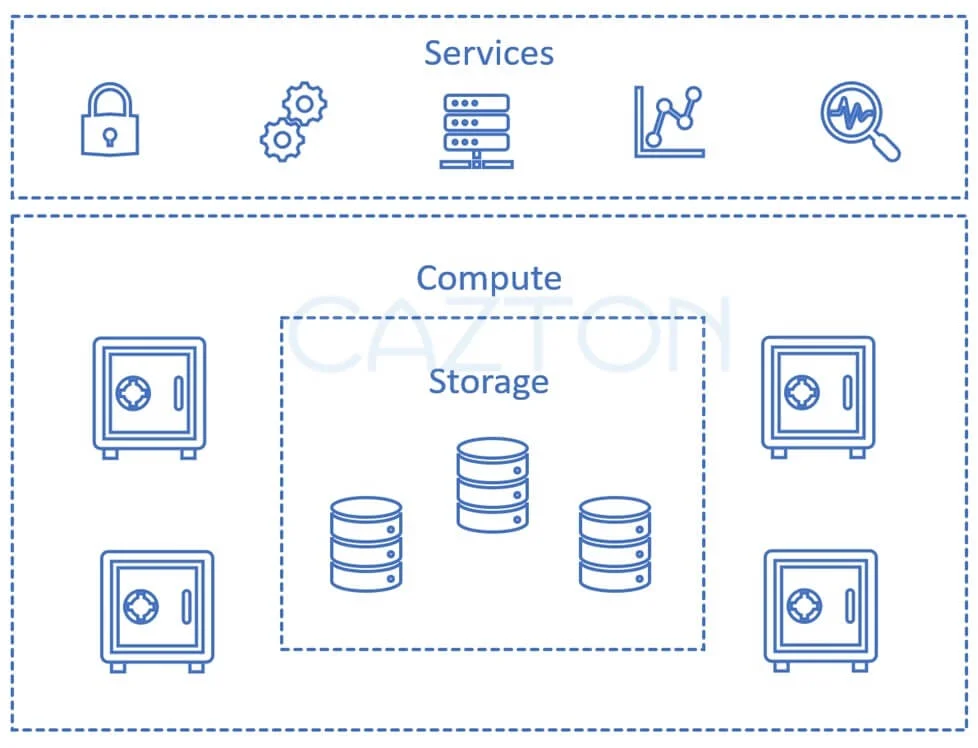

Unlike other products in the same space that use Hadoop and other big data technologies for data storage and processing, Snowflake uses a new SQL database design with a cloud-optimized architecture. Snowflake's multi-cluster shared data architecture consists of three separate layers: services, compute and storage. The compute and storage layers scale independently, and redundancy is built in. Snowflake offers a pay-as-you-go pricing model in which you pay only for the compute and storage resources you use. There are two flexible options: on-demand usage with no long-term commitment, or pre-purchased capacity.

As mentioned above, Snowflake lets you run ANSI standard SQL queries against your structured and semi-structured data. It also supports materialized views and ACID transactions. Snowflake has a large ecosystem that supports a wide range of industry-leading tools and technologies. Its connectors, drivers, programming languages and utilities enable data integration, business intelligence, machine learning, data science, and security and governance of your data.

Have you worked with multi-billion-dollar software development, consulting and recruiting companies? If so, we provide higher-quality services at a much better price point. Our team of expert cloud specialists, data scientists, data engineers, data analysts, architects and consultants has worked in the tech, finance, real estate, healthcare, gaming, logistics, energy, fitness, and media and entertainment sectors. Our services range from building a proof of concept to developing an enterprise data warehouse to customized Snowflake training programs. We will help you use Snowflake's powerful cloud-based warehouse for all of your data needs.

Top Features

- Zero management: Snowflake offers an easy-to-use interface for creating data stores and data warehouses. It is designed to scale and perform without indexing, performance tuning, partitioning or physical storage design. You can focus on your data analytics while Snowflake manages user authentication, data protection, resource management, configuration and high availability.

- Multi-cloud support: Snowflake runs on the top three public cloud providers (AWS, Azure and GCP) with multi-region support. Snowflake does not offer an on-premises solution. However, if your data is stored on-premises, you can use an existing tool, connector or driver to connect to Snowflake and migrate your data into it.

- Automatic scaling: Snowflake's Enterprise Edition offers multi-cluster virtual warehouses that automatically add or remove clusters as query concurrency rises and falls. Each cluster runs independently while using the same underlying storage.

- Security: Snowflake provides best-in-class security features for its users and data. It supports multi-factor authentication (MFA), OAuth and single sign-on (SSO) for user authentication. For data security, it offers automatic AES-256 encryption, end-to-end encryption for data in transit and at rest, periodic rekeying of encrypted data, and dynamic data masking. Snowflake also holds third-party certifications and validations for security standards such as HIPAA, PCI-DSS, ISO/IEC 27001 and others.

- Data diversity: Snowflake supports both structured and semi-structured data. You can bulk-load data into Snowflake from your local file system, Amazon S3, Google Cloud Storage and Azure Blob Storage. With Snowpipe, a continuous data ingestion service, you can load data in micro-batches as soon as it is available. Snowflake supports a variety of file formats, including delimited files (CSV, TSV, etc.), JSON, Avro, ORC, Parquet and XML.

- Easy to use: Snowflake offers a web interface and a command-line interface (CLI) for managing all of its features. It also offers connectors and drivers for Python, Spark, Kafka, Go, Node.js, .NET, JDBC and ODBC, with multi-platform (Windows, Linux and macOS) support.

- Pay as you go: Snowflake's auto-suspend (after a configurable idle period, such as ten minutes) and auto-resume (when a query arrives) features avoid consuming Snowflake credits while compute resources are not in use. Each time a warehouse is resumed or resized to a larger size, your account is billed for a minimum of one minute of usage. After the first minute, compute is billed per second.
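To make the one-minute-minimum, per-second billing rule concrete, here is a small Python calculator. It is a sketch that assumes the standard credit rates of one credit per hour for an X-Small warehouse, doubling with each size step; confirm current rates for your edition and region. The function names are illustrative, and rounding partial seconds up is this sketch's own simplification.

```python
import math

# Credits per hour for a standard single-cluster warehouse; the rate doubles
# with each size step. Confirm current rates for your edition and region.
CREDITS_PER_HOUR = {
    "XSMALL": 1,
    "SMALL": 2,
    "MEDIUM": 4,
    "LARGE": 8,
    "XLARGE": 16,
}

MINIMUM_BILLED_SECONDS = 60  # each resume or resize up is billed for at least one minute


def billed_seconds(run_seconds: float) -> int:
    """Seconds billed for one continuous run that starts with a resume.

    A run of 0 seconds is treated as "never resumed" and costs nothing; any
    positive run is billed for at least a minute, then per second (partial
    seconds are rounded up in this sketch).
    """
    if run_seconds <= 0:
        return 0
    return max(MINIMUM_BILLED_SECONDS, math.ceil(run_seconds))


def credits_for_runs(size: str, runs: list[float]) -> float:
    """Credits used by a warehouse of `size` that was resumed once per entry in `runs`."""
    total_seconds = sum(billed_seconds(run) for run in runs)
    return CREDITS_PER_HOUR[size] * total_seconds / 3600
```

For example, two short bursts of 30 and 90 seconds on an X-Small warehouse are billed as 60 + 90 = 150 seconds (about 0.042 credits), while an uninterrupted hour on a Medium warehouse costs 4 credits. Because auto-suspend stops the clock between bursts, idle time never appears in `runs`.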

Snowflake has a lot to offer and listing every feature is beyond the scope of this article. At Cazton, our experts have helped many clients with Snowflake consulting and customized training services. Feel free to contact us if you wish to learn more about Snowflake and what our experts can do for you.

Snowflake Architecture

Snowflake's architecture is a hybrid of traditional shared-disk and shared-nothing architectures that combines the best of both worlds. Like a shared-disk architecture, it keeps data management simple by using a central data repository for persisted data that is accessible from all compute nodes. Like a shared-nothing architecture, it achieves performance and scalability through MPP (massively parallel processing) compute clusters, in which each node stores a portion of the data set locally and queries are processed in parallel. This enables fast, scalable analytics and transformations.

The multi-cluster shared data architecture consists of three separate layers:

- Storage Layer: The storage layer holds databases, tables and views. All the data you ingest into Snowflake is stored in this layer, on highly secured cloud storage. As soon as data is loaded, it is converted into an optimized, compressed, columnar format and encrypted with strong AES-256 encryption. Data is encrypted end to end, both in transit and at rest.

- Compute Layer: In this layer, queries are executed using resources provisioned from the cloud provider. You can create multiple independent compute clusters, called virtual warehouses, that access the same storage layer. Virtual warehouses can be scaled up or down without downtime or disruption. You can isolate workloads as needed, for example by using separate virtual warehouses to load and query data concurrently. Since all virtual warehouses share the same underlying storage layer, updates made through one are immediately available to all the others.

- Services Layer: This layer coordinates and manages the entire system. It authenticates users, manages sessions, secures data and performs query compilation and optimization. It manages the virtual warehouses and coordinates data storage updates and access. It also ensures that once the transaction is completed all virtual warehouses see the same new version of the data with no impact on availability or performance.
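Virtual warehouses in the compute layer are created with DDL. The Python helper below is a sketch that renders a `CREATE WAREHOUSE` statement with auto-suspend, auto-resume and, optionally, multi-cluster settings. `load_wh` and `query_wh` are hypothetical names chosen to illustrate isolating loading from querying. The multi-cluster settings assume Enterprise Edition or higher, and identifiers are interpolated directly, so pass only trusted names.

```python
from dataclasses import dataclass


@dataclass
class WarehouseSpec:
    """Settings for a hypothetical virtual warehouse."""
    name: str
    size: str = "XSMALL"
    auto_suspend_seconds: int = 600  # suspend after 10 idle minutes
    min_clusters: int = 1
    max_clusters: int = 1            # > 1 requests a multi-cluster warehouse


def create_warehouse_sql(spec: WarehouseSpec) -> str:
    """Render CREATE WAREHOUSE DDL for a spec (trusted identifiers only)."""
    if not 1 <= spec.min_clusters <= spec.max_clusters:
        raise ValueError("need 1 <= min_clusters <= max_clusters")
    parts = [
        f"CREATE WAREHOUSE IF NOT EXISTS {spec.name}",
        f"WAREHOUSE_SIZE = '{spec.size}'",
        f"AUTO_SUSPEND = {spec.auto_suspend_seconds}",
        "AUTO_RESUME = TRUE",
        "INITIALLY_SUSPENDED = TRUE",
    ]
    if spec.max_clusters > 1:
        # Multi-cluster settings need Enterprise Edition or higher.
        parts += [
            f"MIN_CLUSTER_COUNT = {spec.min_clusters}",
            f"MAX_CLUSTER_COUNT = {spec.max_clusters}",
        ]
    return " ".join(parts) + ";"


# Separate warehouses isolate loading from querying; both read the same storage layer.
LOAD_WH = WarehouseSpec(name="load_wh", size="MEDIUM")
QUERY_WH = WarehouseSpec(name="query_wh", size="SMALL", max_clusters=3)
```

Run `create_warehouse_sql(LOAD_WH)` and `create_warehouse_sql(QUERY_WH)` through any client, such as SnowSQL or a driver. Loads on `load_wh` then never compete with dashboards on `query_wh`, which can add up to three clusters when many queries arrive at once.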

Now that we have learned about the different layers in Snowflake's architecture, let's look into the different operations that can be carried out with Snowflake.

- Connecting to Snowflake: Snowflake offers a web-based user interface (UI) and a command-line interface (CLI), which can be used to manage and use all of Snowflake's features. Applications can also connect through various drivers and connectors.

- Loading data into Snowflake: Data can be loaded into Snowflake by uploading/ingesting files from different sources. Let's take a quick look at the data loading options.

- Limited data loading: For small amounts of data, the Snowflake web UI can load small files directly.

- Bulk data loading: This deals with loading large volumes of data into Snowflake.

- SnowSQL: SnowSQL is Snowflake's SQL CLI. It lets you load structured data from comma-delimited CSV files and semi-structured data from JSON, Avro, Parquet and ORC files.

- COPY command: This command loads batches of data from files that are already available in cloud storage. To load files from a local machine, you must first upload them to an internal stage (for example with the PUT command) and then load the data into database tables using the COPY command.

While loading data into a table using the COPY command, we can also transform the data. Available transformations include column reordering, column omission, casts, truncating text strings that exceed the target column length, and more.

- Third-party tools: ETL (extract, transform, load) and BI tools can also load data into Snowflake through third-party connectors.

- Continuous data loading: This deals with continuously loading small volumes of data referred to as micro-batches into Snowflake and incrementally making them available for analysis. Here we can use Snowpipe for continuous data loading.

- Loading data from Kafka: Snowflake's Kafka connector reads data from one or more Kafka topics and loads it into Snowflake database tables.
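The bulk and continuous loading paths above can be sketched as a few Python helpers that render the SQL a client (SnowSQL or a driver) would send. This is a minimal sketch: the table `orders`, the stage paths, the pipe name and the CSV layout are hypothetical, and identifiers are interpolated directly, so pass only trusted names. `PUT` stages a local file, `COPY INTO ... FROM (SELECT ...)` reorders columns during the load, and `CREATE PIPE ... AS COPY INTO ...` wraps the same COPY in Snowpipe. We assume `AUTO_INGEST`, which depends on cloud event notifications and therefore an external stage.

```python
def put_sql(local_path: str, stage: str) -> str:
    """Render a PUT that uploads a local file to an internal stage (e.g. '@~/staged')."""
    return f"PUT file://{local_path} {stage} AUTO_COMPRESS = TRUE;"


def copy_with_transform_sql(table: str, columns: list[str], stage: str,
                            positions: list[int]) -> str:
    """Render a COPY INTO that loads CSV files, mapping file columns to table columns.

    positions[i] is the 1-based column number in the file that feeds columns[i],
    so listing them out of order reorders columns and leaving one out omits it.
    """
    if len(columns) != len(positions):
        raise ValueError("each target column needs exactly one source position")
    select_list = ", ".join(f"t.${p}" for p in positions)
    return (
        f"COPY INTO {table} ({', '.join(columns)}) "
        f"FROM (SELECT {select_list} FROM {stage} t) "
        "FILE_FORMAT = (TYPE = 'CSV' SKIP_HEADER = 1) "
        "TRUNCATECOLUMNS = TRUE;"  # truncate strings longer than the target column
    )


def create_pipe_sql(pipe: str, copy_statement: str) -> str:
    """Wrap a COPY statement in a Snowpipe definition for continuous loading.

    AUTO_INGEST = TRUE relies on cloud storage event notifications, so the
    COPY statement should read from an external stage.
    """
    return f"CREATE PIPE IF NOT EXISTS {pipe} AUTO_INGEST = TRUE AS {copy_statement}"
```

For instance, `put_sql("/tmp/orders.csv", "@~/staged")` followed by `copy_with_transform_sql("orders", ["order_id", "amount"], "@~/staged", [2, 1])` loads a file whose first column is the amount and whose second is the ID, swapping them on the way in.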

Once data is loaded into Snowflake, it passes through the following process:

- Snowflake breaks tables into multiple smaller partitions called micro-partitions, each containing 50 to 500 MB of uncompressed data.

- Within each micro-partition, the data is reorganized into a columnar layout so that the values of each column are stored together.

- Each column value is compressed individually.

- A header containing metadata, such as column offsets, is added to each micro-partition.

- Using this metadata, a query reads only the columns it needs.
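To show why this layout pays off, here is a toy Python model. It is not Snowflake's actual file format. It pivots rows into column-major micro-partitions and keeps each column's minimum and maximum as metadata; Snowflake also tracks value ranges per micro-partition and uses them to prune partitions. A filtered scan skips partitions whose range cannot match and touches only the referenced columns. Compression is omitted, and the partition size in the usage note is arbitrary.

```python
from dataclasses import dataclass, field


@dataclass
class MicroPartition:
    """Toy micro-partition: column-major values plus per-column (min, max) metadata."""
    columns: dict[str, list]
    stats: dict[str, tuple] = field(init=False)

    def __post_init__(self) -> None:
        self.stats = {name: (min(vals), max(vals)) for name, vals in self.columns.items()}


def partition_rows(rows: list[dict], rows_per_partition: int) -> list[MicroPartition]:
    """Split row-oriented input into fixed-size chunks and pivot each chunk to columns."""
    parts = []
    for start in range(0, len(rows), rows_per_partition):
        chunk = rows[start:start + rows_per_partition]
        columns = {name: [row[name] for row in chunk] for name in chunk[0]}
        parts.append(MicroPartition(columns))
    return parts


def scan(parts: list[MicroPartition], filter_col: str, lo, hi,
         wanted_col: str) -> tuple[list, int]:
    """Return (wanted_col values where lo <= filter_col <= hi, partitions actually read).

    A partition whose (min, max) range cannot overlap [lo, hi] is skipped using
    metadata alone, and only the two referenced columns of the others are read.
    """
    values, partitions_read = [], 0
    for part in parts:
        col_min, col_max = part.stats[filter_col]
        if col_max < lo or col_min > hi:
            continue  # pruned: no row in this partition can match
        partitions_read += 1
        for key, value in zip(part.columns[filter_col], part.columns[wanted_col]):
            if lo <= key <= hi:
                values.append(value)
    return values, partitions_read
```

With 100 rows of `order_id` 1 to 100 split 25 per partition, filtering `order_id` between 30 and 40 reads only the second partition; the other three are ruled out by their ranges without touching their data.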

- Unloading/exporting data from Snowflake: Similar to data loading, Snowflake lets you unload data into a Snowflake (internal) stage, Amazon S3, Google Cloud Storage or Microsoft Azure Blob Storage. The exported data can be in structured or semi-structured file formats. Let's take a quick look at the available options:

- Bulk unloading process: This process is the reverse of loading data into Snowflake. The COPY command copies data from Snowflake database tables into one or more files in an internal Snowflake stage or an external stage. Files in an internal stage can then be downloaded to a local machine with the GET command, while an external stage writes them directly to external storage such as Amazon S3 or Azure Blob Storage.

- Bulk unloading using queries: Here the COPY command takes a SELECT statement instead of a table, and the query results are written to files at the location specified in the command.

- Bulk unloading into single or multiple files: The COPY command can export data into a single file or into multiple files; multiple files is the default (SINGLE = FALSE). When exporting into multiple files, we recommend specifying the MAX_FILE_SIZE option in the COPY command to set the maximum size of each file created.

- Querying data in Snowflake: Snowflake supports ANSI standard SQL, so users can execute both DDL and DML statements. Users can write common table expressions (CTEs), subqueries, window functions, sequences and more to query hierarchical and semi-structured data. Let's take a quick look at the query lifecycle.

- The first step is to connect to Snowflake through one of its supported clients and start a session.

- Once the session has started, users can specify the virtual warehouse (VW) to use in the session and start executing queries.

- When a query is executed, the services layer first authorizes it.

- The query is then compiled and an optimized query plan is created.

- The services layer sends the query execution instructions to the VW.

- The VW allocates resources, requests any data it needs from the storage layer and executes the query.

- Finally, the result is returned to you.
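The query lifecycle above maps onto a few driver calls. This sketch assumes the `snowflake-connector-python` package, whose `snowflake.connector.connect()` opens the session (the first step). `run_query` then selects a warehouse and executes the query; authorization, compilation and execution happen inside Snowflake before the rows come back. The `SNOWFLAKE_*` environment variable names, the `query_wh` warehouse, the `raw_orders` table and its VARIANT column `raw` are all hypothetical.

```python
import os


def connect_from_env():
    """Start a session (lifecycle step 1) with snowflake-connector-python.

    Requires `pip install snowflake-connector-python`; the SNOWFLAKE_* variable
    names are this sketch's own convention.
    """
    import snowflake.connector  # imported lazily so run_query can be tested without it

    return snowflake.connector.connect(
        account=os.environ["SNOWFLAKE_ACCOUNT"],
        user=os.environ["SNOWFLAKE_USER"],
        password=os.environ["SNOWFLAKE_PASSWORD"],
    )


def run_query(conn, warehouse: str, sql: str, params=None) -> list:
    """Choose a virtual warehouse for the session, run one query and fetch all rows."""
    cur = conn.cursor()
    try:
        cur.execute(f"USE WAREHOUSE {warehouse}")  # step 2: pick the VW (trusted name)
        cur.execute(sql, params)                   # steps 3-6 run inside Snowflake
        return cur.fetchall()                      # step 7: the result comes back
    finally:
        cur.close()


# Semi-structured query using a CTE and a window function over a VARIANT column.
TOP_CUSTOMERS_SQL = """
WITH orders AS (
    SELECT raw:customer.name::STRING AS customer,
           raw:total::NUMBER(10, 2)  AS total
    FROM raw_orders
)
SELECT customer,
       SUM(total) AS revenue,
       RANK() OVER (ORDER BY SUM(total) DESC) AS revenue_rank
FROM orders
GROUP BY customer
"""
```

A typical call is `run_query(connect_from_env(), "query_wh", TOP_CUSTOMERS_SQL)`. Keeping `run_query` independent of how the connection is created makes it easy to test against a stand-in connection object.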
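Returning to the bulk unloading options described earlier, the helpers below render an unload-by-query `COPY INTO` statement and the `GET` that downloads the resulting files from an internal stage. This is a sketch. The stage path, table and query are hypothetical, and Parquet is an arbitrary format choice. The 16 MB `MAX_FILE_SIZE` mirrors what we understand to be Snowflake's default, so check the COPY documentation for your version.

```python
def unload_query_sql(stage_path: str, query: str, single: bool = False,
                     max_file_size: int = 16 * 1024 * 1024) -> str:
    """Render a COPY INTO <location> that unloads a query's results as Parquet files.

    single=False (multiple files) matches COPY's default; max_file_size caps
    each output file, in bytes.
    """
    return (
        f"COPY INTO {stage_path} FROM ({query}) "
        "FILE_FORMAT = (TYPE = 'PARQUET') "
        f"SINGLE = {'TRUE' if single else 'FALSE'} "
        f"MAX_FILE_SIZE = {max_file_size};"
    )


def get_sql(stage_path: str, local_dir: str) -> str:
    """Render a GET that downloads files from an internal stage to a local directory."""
    return f"GET {stage_path} file://{local_dir};"
```

For example, unloading to `@~/exports/orders_` and then running `get_sql("@~/exports/", "/tmp/exports/")` writes the query results to the user stage and copies them to the local machine. With an external stage, the `GET` step is unnecessary because the files land directly in S3, GCS or Azure.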

Security in Snowflake

Snowflake follows industry security best practices and provides comprehensive data protection and compliance. It supports multiple compliance standards, including SOC 1 Type II, SOC 2 Type II, PCI-DSS, HIPAA, ISO/IEC 27001 and FedRAMP Moderate. Under the Federal Risk and Authorization Management Program (FedRAMP), it is authorized to operate at the Moderate level for U.S. federal government agencies.

Snowflake lets you manage access to data sets, monitor usage and access, and control workflows while relying on its built-in security features. It performs dynamic data masking and end-to-end data encryption. Its network policies can restrict access by IP address using allow lists and block lists. Snowflake's Business Critical edition or higher supports private connectivity through services such as AWS PrivateLink and Azure Private Link, so traffic does not cross the public internet. Account authentication is strengthened with multi-factor authentication, OAuth and single sign-on. A hybrid model of discretionary access control (DAC) and role-based access control (RBAC) provides fine-grained access to all objects, such as tables, databases and warehouses. Application auditing helps log all actions.
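Several of the controls above are configured with SQL. The helpers below sketch a dynamic data masking policy, a network policy with allowed and blocked IP lists, and read-only RBAC grants. Every policy, role, table, IP range and user name used with them is hypothetical. Masking policies assume Enterprise Edition or higher, and the helpers only render statements for you to review and run through a client.

```python
from collections.abc import Iterable


def _quoted_list(items: Iterable[str]) -> str:
    """Render ['a', 'b'] as "'a', 'b'" for SQL IN lists and IP lists."""
    return ", ".join(f"'{item}'" for item in items)


def masking_policy_sql(policy: str, allowed_roles: list[str]) -> str:
    """Show a STRING column in clear text only to the listed roles."""
    return (
        f"CREATE MASKING POLICY IF NOT EXISTS {policy} AS (val STRING) RETURNS STRING -> "
        f"CASE WHEN CURRENT_ROLE() IN ({_quoted_list(allowed_roles)}) THEN val ELSE '*****' END;"
    )


def apply_masking_sql(table: str, column: str, policy: str) -> str:
    """Attach a masking policy to a column."""
    return f"ALTER TABLE {table} MODIFY COLUMN {column} SET MASKING POLICY {policy};"


def network_policy_sql(name: str, allowed: list[str], blocked: tuple[str, ...] = ()) -> str:
    """Allow connections only from `allowed` CIDR ranges, minus any `blocked` addresses."""
    sql = f"CREATE NETWORK POLICY {name} ALLOWED_IP_LIST = ({_quoted_list(allowed)})"
    if blocked:
        sql += f" BLOCKED_IP_LIST = ({_quoted_list(blocked)})"
    return sql + ";"


def read_only_grants_sql(role: str, warehouse: str, database: str, schema: str,
                         user: str) -> list[str]:
    """Grant a role just enough privileges to query one schema, then assign it to a user."""
    return [
        f"CREATE ROLE IF NOT EXISTS {role};",
        f"GRANT USAGE ON WAREHOUSE {warehouse} TO ROLE {role};",
        f"GRANT USAGE ON DATABASE {database} TO ROLE {role};",
        f"GRANT USAGE ON SCHEMA {database}.{schema} TO ROLE {role};",
        f"GRANT SELECT ON ALL TABLES IN SCHEMA {database}.{schema} TO ROLE {role};",
        f"GRANT ROLE {role} TO USER {user};",
    ]
```

With `masking_policy_sql("email_mask", ["ANALYST"])` applied to an `email` column, users whose current role is `ANALYST` see real addresses and everyone else sees `*****`. Granting privileges to roles rather than directly to users is what makes the RBAC side of the model manageable as teams grow.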

There's a lot more to Snowflake's security features. Organizations get the advantage of a strong security infrastructure along with fine-grained control over their data. At Cazton, our experts have been using the Snowflake data platform for many years. They can advise you on security best practices and help you build a secure, scalable solution. Contact us to learn more about Snowflake security and what our experts can do for you.

How Cazton Can Help You With Snowflake

Cazton's cloud services experts have many years of experience with Snowflake and have worked in the real estate, healthcare, gaming, logistics, energy, fitness, and media and entertainment sectors. As early adopters of Snowflake, we have used it to build secure and scalable data workloads for data analytics.

We offer the following services at cost-effective rates:

- Snowflake consulting, recruiting and customized training.

- Analyze business requirements and build powerful cloud-based warehouses.

- Migrate data warehouses to Snowflake on all major cloud platforms: Azure, AWS and GCP.

- Create custom analytics and BI integrations with Snowflake.

- Advise you on cloud and security best practices.

- Advise you on design and architectural best practices.

- Migrate your on-premises data to Snowflake.

- Help you develop high-performance, scalable, enterprise-level data-driven applications using connectors, drivers, and third-party tools and technologies.

- Integrate Snowflake solutions for big data analytics.

- Integrate Snowflake solutions for machine learning and data science.

- Customized Snowflake training from beginner to advanced level.

- Industry leading data migration solutions.

- Disaster management and data recovery.

- Audit and assess your requirements to design a strong and cost-efficient data analytics strategy.

References

- (1) https://sec.report/Document/0001628280-20-013010/

Cazton is composed of technical professionals with expertise gained all over the world and in all fields of the tech industry, and we put this expertise to work for you. We serve all industries, including banking, finance, legal services, life sciences and healthcare, technology, media, and the public sector. Check out some of our services:

- Artificial Intelligence

- Big Data

- Web Development

- Mobile Development

- Desktop Development

- API Development

- Database Development

- Cloud

- DevOps

- Enterprise Search

- Blockchain

- Enterprise Architecture

Cazton has expanded into a global company, serving clients not only across the United States but also in Oslo, Norway; Stockholm, Sweden; London, England; Berlin, Germany; Frankfurt, Germany; Paris, France; Amsterdam, Netherlands; Brussels, Belgium; Rome, Italy; Sydney and Melbourne, Australia; and Quebec City, Toronto, Vancouver, Montreal, Ottawa, Calgary, Edmonton, Victoria and Winnipeg, Canada. In the United States, we provide our consulting and training services in cities such as Austin, Dallas, Houston, New York, New Jersey, Irvine, Los Angeles, Denver, Boulder, Charlotte, Atlanta, Orlando, Miami, San Antonio, San Diego, San Francisco, San Jose, Stamford and others. Contact us today to learn more about what our experts can do for you.