OpenAI Consulting

- AI for all, powered by OpenAI: OpenAI’s state-of-the-art reasoning models - like o1 and o3 - are redefining how AI can empower everyday employees with the expertise and precision of PhD-level knowledge.

- AI agents: Employ an army of AI agents fully capable of helping make your team more productive. We can help you create an AI system fully powered by the same APIs and technologies leveraged by ChatGPT.

- Marketing without the madness: Our AI marketing platform understands your brand voice and audience like a seasoned strategist, eliminating redundant campaign tasks with 100% automation so you can focus solely on your creative vision while flawlessly executing personalized campaigns that drive company growth.

- Code without the chaos: Working alongside your engineering teams like a seasoned developer, our AI AutoPilot eliminates repetitive coding tasks and delivers 100% automation solutions, allowing you to focus on innovation while achieving 75% faster code delivery, 95% test coverage, and 90% smoother deployments.

- Browse without the burden: Acting as your most reliable team member with natural intuition, our AI agent seamlessly handles tedious web tasks through 100% automation, letting you concentrate on strategic decisions while gathering insights and supporting customers effortlessly.

- Data without the drama: Our SQL AI agent anticipates your data needs and removes complex technical barriers, delivering 100% automation that allows you to focus on strategic insights while getting complex queries and reports with the precision of your most skilled analyst.

- Secret sauce behind ChatGPT: Apart from OpenAI's models, ChatGPT leverages Azure AI Search and Azure Cosmos DB to make cutting-edge AI accessible to all. With Microsoft AI MVPs, Azure Cosmos DB Cosmonauts, and Azure MVPs on our team, we are uniquely positioned to help organizations succeed.

- Text, images, audio, and video AI: OpenAI's models are multi-modal and understand multiple types of input, such as text, images, audio, and video. They can read, understand, and even talk like a human.

- Tailored AI solutions for your business: We create AI solutions specifically designed for your business, finely tuned to your unique needs. Our approach enhances decision-making, automates workflows, and improves customer engagement, all based on your goals and use cases.

- Team of experts: Our team is composed of PhD- and Master's-level experts in data science and machine learning, award-winning Microsoft AI MVPs, open-source contributors, and seasoned industry professionals with years of hands-on experience.

- Microsoft and Cazton: We work closely with OpenAI, Azure OpenAI, and many other Microsoft teams. We have been working on LLMs since 2020, a couple of years before ChatGPT was launched. We are grateful to have early access to these models from Microsoft, Google, and open-source vendors.

- Top clients: We help Fortune 500, large, mid-size, and startup companies with Big Data and AI development, deployment (MLOps), consulting, recruiting services, and hands-on training. Our clients include Microsoft, Google, Broadcom, Thomson Reuters, Bank of America, Macquarie, Dell, and more.

OpenAI is revolutionizing the way we work and learn. With its powerful API and developer tools, businesses are integrating artificial intelligence into their workflows, transforming operations across industries. Where people once turned to search engines for information, many now rely on ChatGPT - OpenAI's flagship tool - for instant, personalized responses. A manager inputs a request into ChatGPT and receives a polished PowerPoint draft in seconds. A CEO uses OpenAI's tools to get key metrics, strategic recommendations, and a well-structured agenda in moments. With OpenAI, work isn’t just faster - it’s smarter, transforming how enterprises operate and enabling them to achieve more in less time.

OpenAI APIs For You

OpenAI offers a variety of APIs that developers can use to build AI-powered applications. Here are some of the key APIs:

- Chat Completions API: This API allows developers to build chat-based applications where the model can generate responses based on user input.

- Realtime API: Designed for low-latency, real-time interactions, this API, which supports both voice and text, is ideal for applications requiring immediate responses.

- Assistants API: This API enables developers to create AI assistants that can perform complex, multi-step tasks by leveraging models, tools, and knowledge.

- Batch API: This API is used for running asynchronous workloads, allowing developers to process large volumes of data efficiently.

- Code Interpreter: This tool allows models to write and run code iteratively, solving challenging code and math problems and generating charts.

- Function Calling: This capability lets the model request calls to custom functions you define, so it can interact with your codebase and APIs.

- Vision API: This API enables models to understand and answer questions about images using vision capabilities.

- Streaming API: This API delivers model outputs incrementally as they are generated, so applications can display them in real time.

- Fine-tuning API: This API allows developers to customize a model’s existing knowledge and behavior for specific tasks using text and images.

- Structured Output: This feature constrains the model's output to a JSON schema you supply, making it easier to integrate with other systems.
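As an illustration of how two of the APIs above fit together, here is a minimal sketch that prepares an input file for the Batch API in which every request is a Chat Completions call asking for Structured Output. It only builds the JSONL text and makes no network call. The ticket schema, the `custom_id` values, and the helper names are hypothetical and chosen purely for illustration.

```python
import json

# Hypothetical JSON Schema for the structured reply; the field names are illustrative.
TICKET_SCHEMA = {
    "type": "object",
    "properties": {
        "category": {"type": "string", "enum": ["billing", "technical", "other"]},
        "summary": {"type": "string"},
    },
    "required": ["category", "summary"],
    "additionalProperties": False,
}


def build_batch_line(custom_id: str, ticket_text: str, model: str = "gpt-4o-mini") -> str:
    """Return one JSONL line describing a Chat Completions request for a batch file."""
    body = {
        "model": model,
        "messages": [
            {"role": "system", "content": "Classify the support ticket."},
            {"role": "user", "content": ticket_text},
        ],
        # Structured Output: ask for JSON that conforms to the schema above.
        "response_format": {
            "type": "json_schema",
            "json_schema": {"name": "ticket", "strict": True, "schema": TICKET_SCHEMA},
        },
    }
    request = {
        "custom_id": custom_id,  # lets you match each result back to its request
        "method": "POST",
        "url": "/v1/chat/completions",
        "body": body,
    }
    return json.dumps(request)


def build_batch_file(tickets: dict) -> str:
    """Join one request per ticket into the JSONL text used as the batch input file."""
    return "\n".join(build_batch_line(cid, text) for cid, text in tickets.items())


batch_jsonl = build_batch_file({
    "ticket-1": "I was charged twice this month.",
    "ticket-2": "The app crashes when I open settings.",
})
```

The resulting text would then be uploaded as a file and submitted as a batch job; those steps are omitted here because they require credentials.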

We have used these technologies extensively for many of our clients, and we value what our team has learned from building real-world applications, orchestrating multiple AI agents, and running them in production.

Challenges with OpenAI Projects

Building effective AI solutions is not just about using cutting-edge tools - it's about overcoming significant real-world challenges. According to Fortune magazine, the failure rate of AI projects across industries is staggeringly high, ranging from 83% to 92%. Many businesses struggle to translate AI's promise into tangible results, often due to a lack of expertise, poor alignment with business needs, or the inability to address critical challenges like scalability, accuracy, and user experience. However, at Cazton, we have consistently bucked this trend, achieving a 100% success rate on AI projects fully managed and delivered by our AI team.

One key hurdle in AI implementation is ensuring systems mitigate hallucinations and perform reliably in diverse, unpredictable environments. For example, an AI solution designed to forecast retail demand may falter when faced with sudden disruptions like supply chain issues or global crises. Adapting to such challenges requires fine-tuning algorithms, retraining models, and rigorous testing - areas where Cazton excels. We've fine-tuned more than 100 AI models and rigorously tested over 200 to ensure they deliver accurate, consistent, and actionable results.

Another significant challenge is balancing AI's transformative potential with the responsibility of mitigating risks. Generative AI, for instance, can produce convincing text, images, and videos but is prone to biases and errors. Imagine an AI providing misleading financial insights or inaccurate medical recommendations - such mistakes can lead to costly or even dangerous outcomes. That's why we emphasize robust monitoring frameworks, ethical alignment, and comprehensive testing to ensure our AI solutions are reliable and trustworthy.

At Cazton, we understand what it takes to deliver AI systems that meet real-world needs, including scalability, performance, user experience, and accuracy. Our team is composed of PhD- and Master's-level experts in data science and machine learning, award-winning Microsoft AI MVPs, open-source contributors, and seasoned professionals with years of hands-on experience. Leveraging platforms like OpenAI, we help businesses unlock the potential of advanced language models, vision systems, video generation, and voice-based AI to streamline operations and enhance user experiences. Whether you're a Fortune 500 company or a fast-growing startup, we focus on making AI accessible, scalable, and impactful, allowing you to prioritize innovation while we handle the complexities behind the scenes.

OpenAI Models

OpenAI's latest advancements bring smarter, faster, and more versatile AI models, transforming how businesses work and innovate.

- o3 and o3-mini: The o3 and o3-mini models bring a new level of excellence in AI reasoning, particularly in coding, mathematics, and complex problem-solving. With reinforcement learning, o3 uses a 'private chain of thought' to improve its reasoning, enabling it to perform a series of steps for greater accuracy. Additionally, o3 has achieved notable accuracy on the ARC-AGI benchmark. Complementing these advancements, o3-mini offers similar capabilities at a more affordable price, making these models versatile and efficient for real-world applications.

- o1 and o1-mini: These models are designed for advanced reasoning tasks, excelling in areas like math, coding, and science. The o1 model focuses on deep reasoning and multi-domain problem-solving, while the o1-mini is optimized for efficiency and cost-effectiveness, making it ideal for specialized, smaller-scale applications. The two models cater to different needs: o1 for comprehensive cognitive tasks and o1-mini for lightweight reasoning tasks.

- GPT-4o and GPT-4o-mini: As optimized versions of GPT-4, these models provide exceptional performance with enhanced processing speed, superior context awareness, and seamless multimodal integration. These models efficiently handle text, images, and audio, making them versatile tools for a variety of everyday use cases. While both models offer outstanding value, GPT-4o-mini is designed to be more cost-effective and compact, making it ideal for applications requiring high efficiency at lower operational costs. On the other hand, GPT-4o offers slightly more advanced capabilities, making it suitable for tasks that demand higher computational power. Together, these models provide flexible and reliable solutions tailored to different needs, ensuring quality and efficiency across various applications.

- Sora: Sora is a groundbreaking text-to-video AI model that brings imagination to life by generating realistic and imaginative scenes from text instructions. First previewed in February 2024 and made publicly available in December 2024, Sora was introduced as able to create videos up to a minute long while maintaining high visual quality and adherence to the user's prompt. It simulates the physical world in motion, making it a powerful tool for filmmakers, educators, and marketers. Sora also includes features like Remix, which allows users to replace, remove, or reimagine elements in their videos, and Storyboard, which helps organize and edit unique video sequences.

- DALL·E: This model stands out with its remarkable ability to generate high-quality images from text prompts, surpassing its previous versions. It captures intricate details and provides a more faithful representation of the given descriptions, making it an invaluable tool for creative projects. Enhanced with native integration with ChatGPT, DALL·E allows users to effortlessly refine and iterate on their prompts, resulting in precise and stunning visuals. Moreover, it incorporates improved safety features to minimize the risk of generating inappropriate content, ensuring a more secure user experience.

We help customers integrate these models and fully customize the experience. Additionally, we help create enterprise-grade AI systems that multiply productivity per employee, increasing revenue while decreasing costs.

Voice AI: AI That Really Speaks Like a Human

Did you know we’re the first company to create an iOS app powered by OpenAI’s native voice API, also known as the Realtime API? By leveraging Voice AI, we’ve built applications that are truly hands-free and eyes-free. This enables seamless interactions and opens up exciting possibilities for both accessibility and productivity. It's particularly beneficial for individuals with disabilities, offering alternative ways to engage with devices and services.

Consider a healthcare application where patients can schedule check-ups. For instance, a patient could say, "Book a follow-up appointment with Dr. Smith next Tuesday," and the system would check availability, confirm the time slot, and notify the patient - all in real time. By leveraging the Chat Completions API, this interaction becomes even more seamless and personalized, enhancing user experience and efficiency.

- Engaging spoken summaries: Once the appointment is booked, the system could use the Chat Completions API to provide a spoken summary of the booking details, such as "Your appointment with Dr. Smith has been scheduled for Tuesday at 3 PM." This ensures the user has immediate and clear confirmation. It can also summarize instructions or medications after a consultation, making healthcare interactions more accessible and user-friendly.
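To make the booking scenario above more concrete, here is a minimal sketch of the application side of such a flow using function calling. It assumes the spoken request has already been transcribed and that the model has returned a tool call. The tool name `book_appointment`, its parameters, and the in-memory `AVAILABILITY` table are hypothetical stand-ins for a real scheduling system, and no API call is made.

```python
import json

# Tool definition in the Chat Completions "tools" format.
# The function name and its parameters are hypothetical.
BOOK_APPOINTMENT_TOOL = {
    "type": "function",
    "function": {
        "name": "book_appointment",
        "description": "Book a follow-up appointment with a doctor.",
        "parameters": {
            "type": "object",
            "properties": {
                "doctor": {"type": "string"},
                "day": {"type": "string", "description": "Day of the week"},
            },
            "required": ["doctor", "day"],
        },
    },
}

# Stand-in for a real scheduling system: open slots per (doctor, day).
AVAILABILITY = {("Dr. Smith", "Tuesday"): ["3 PM", "4 PM"]}


def book_appointment(doctor: str, day: str) -> dict:
    """Reserve the first open slot, or report that none is available."""
    slots = AVAILABILITY.get((doctor, day), [])
    if not slots:
        return {"booked": False, "reason": f"No openings for {doctor} on {day}."}
    time = slots.pop(0)
    return {"booked": True, "doctor": doctor, "day": day, "time": time}


def handle_tool_call(name: str, arguments_json: str) -> str:
    """Run the function the model asked for and return JSON for the tool message."""
    if name != "book_appointment":
        return json.dumps({"error": f"Unknown tool: {name}"})
    args = json.loads(arguments_json)
    return json.dumps(book_appointment(args["doctor"], args["day"]))


# Simulated tool call, shaped like the arguments string a model would return.
result = json.loads(
    handle_tool_call("book_appointment", '{"doctor": "Dr. Smith", "day": "Tuesday"}')
)
confirmation = (
    f"Your appointment with {result['doctor']} has been scheduled "
    f"for {result['day']} at {result['time']}."
)
```

In a full application, the JSON returned by `handle_tool_call` would be sent back to the model as a tool message, and the confirmation text would be converted to speech for the patient.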

In the workplace, Voice AI can revolutionize productivity. Imagine a project manager coordinating tasks across teams. By simply saying, "Send a reminder to the design team about the product demo on Friday," the system can draft and send a notification instantly. Additionally, Voice AI can summarize meeting notes, create follow-up tasks, and generate reports based on voice commands. Here's how the APIs enhance these workplace scenarios:

- Sentiment analysis from audio: During a meeting, the Realtime API could analyze the tone of participants to gauge engagement or detect frustration. If someone expresses concern or dissatisfaction, the system can flag it for the manager, enabling proactive action. This enhances workplace communication by helping teams better understand emotional nuances during discussions or feedback sessions.

- Asynchronous speech-in, speech-out interactions: If a manager asks, "What’s the status of the product demo?" during a busy meeting, the Chat Completions API can process the input, retrieve the relevant information, and provide a spoken update later, allowing the manager to stay focused on other priorities. This functionality is perfect for teams working across time zones or in hands-free environments, ensuring that updates are delivered efficiently and at the right moment.

To explore the endless possibilities Voice AI offers and how it can transform your business, check out our Voice AI offering.

Assistants: Doorway to Smart Solutions

Assistants or Agents are designed to interact with users in a natural and intuitive manner. Imagine an assistant as a digital entity with a brain, memory, speech and listening capabilities, and a set of tools at its disposal. They leverage complex machine learning algorithms to understand and respond to a wide variety of queries, making them invaluable in numerous applications, such as personal assistance, customer service, and even healthcare. For a comprehensive understanding of agents, refer to the detailed explanation provided here.

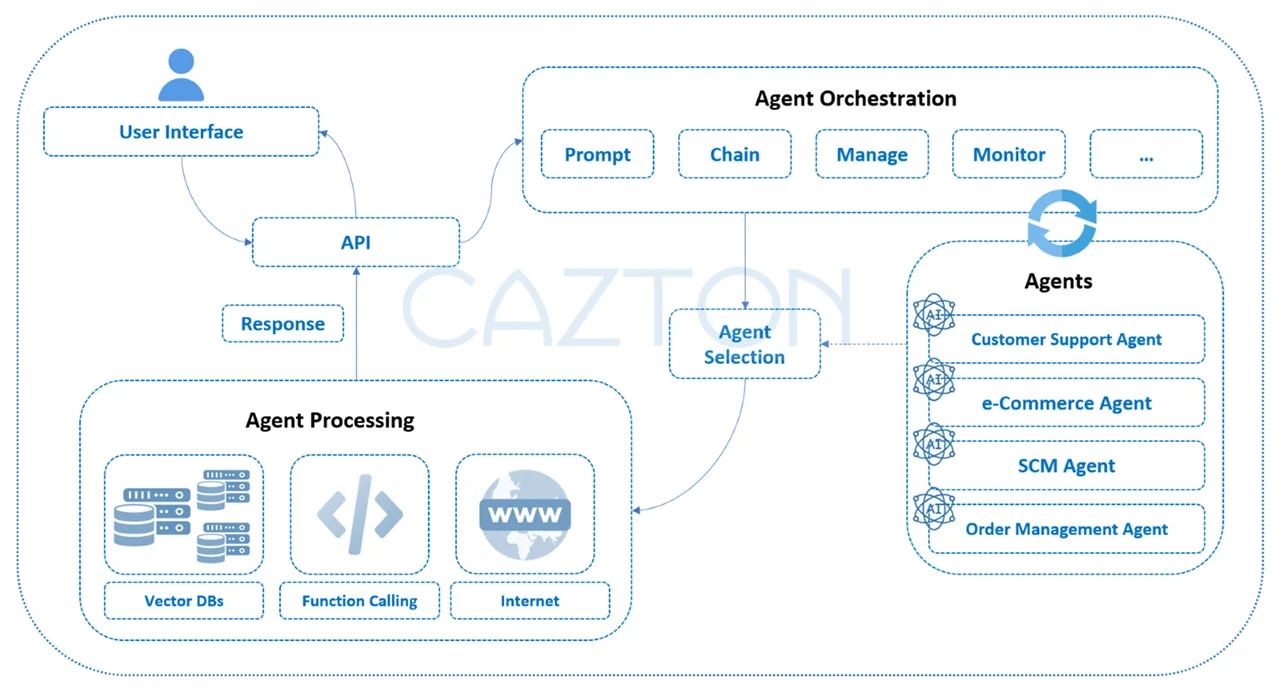

Multi-Agent Orchestration Architecture

The Assistants API by OpenAI empowers developers to build customized AI assistants within their own applications. This API allows for the integration of essential tools and capabilities, making the assistants versatile and powerful. For instance, the API supports three main tools: the Code Interpreter, File Search, and Function Calling. These tools enable assistants to interpret and execute code, search for and manage files, and perform specific functions based on user commands.

Here’s a simplified breakdown of how these assistants work:

- Personalization and tuning: Developers can give assistants specific instructions to fine-tune their personality and capabilities. This means an assistant can be tailored to behave in a particular way, making interactions more engaging and relevant to users.

- Tool integration: Assistants can utilize multiple tools simultaneously. For example, an assistant could use the Code Interpreter to help developers debug code while also accessing the File Search tool to locate relevant documents. This multi-tool functionality enhances the assistant's ability to handle complex tasks.

- Persistent threads: One of the unique features of the Assistants API is the ability to maintain persistent threads. These threads store conversation history, allowing for continuous and context-aware interactions. This means the assistant can remember previous conversations and provide more coherent and contextually relevant responses over time.
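The idea behind instructions and persistent threads can be sketched in a few lines. The example below is not the Assistants API itself. It is a simplified, in-memory illustration of the concept, in which a thread keeps the conversation history and every request is assembled from the assistant's instructions plus the stored messages. The `Thread` class, its method names, and the sample conversation are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Thread:
    """In-memory stand-in for a persistent thread: it keeps the message history."""
    instructions: str
    messages: list = field(default_factory=list)

    def add_user_message(self, text: str) -> None:
        self.messages.append({"role": "user", "content": text})

    def add_assistant_message(self, text: str) -> None:
        self.messages.append({"role": "assistant", "content": text})

    def build_request_messages(self, max_messages: int = 20) -> list:
        """Instructions first, then the most recent messages, so each call has context."""
        recent = self.messages[-max_messages:] if max_messages > 0 else []
        return [{"role": "system", "content": self.instructions}] + recent


thread = Thread(instructions="You are a friendly hotel-booking assistant.")
thread.add_user_message("Find me a hotel in Oslo for next weekend.")
thread.add_assistant_message("The Harbor Hotel has rooms available. Shall I book one?")
thread.add_user_message("Yes, book it.")

# The short follow-up "Yes, book it." is only meaningful because the earlier
# turns travel with it in the request.
request_messages = thread.build_request_messages()
```

Capping the history with `max_messages` is one simple way to keep requests within a model's context window; a production system might summarize older turns instead.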

An example of the application of this API could be in a customer support scenario. Consider a company deploying an AI assistant on its website. The assistant can answer customer queries, help with troubleshooting, and even process orders. For instance, if a user is planning a vacation, the assistant can help them search for hotels, check for available reservations, and book a room. The assistant can also provide information about the user's past orders or reservations, ensuring a seamless experience. This makes the interaction smooth and efficient, enhancing user satisfaction and trust in the service.

Cazton CEO Chander Dhall presented "AI That Talks Like Us: Fabric Makes Chatting with Data Effortless" at Microsoft Fabric Conf 2025, where he demonstrated how Microsoft Fabric, Azure OpenAI, and Azure Cosmos DB work together to create human-like conversational AI that interacts with data in real-time. His session showcased advanced voice-based Retrieval-Augmented Generation (RAG) technology with multilingual capabilities in Japanese, Mandarin, Hindi, French, and Arabic, along with practical implementations using DiskANN, full text search, and hybrid search techniques. The presentation highlighted cutting-edge approaches to making complex data accessible through natural, conversational interactions that break language barriers.

Assistants Use Cases

There are several types of AI assistants, each designed for specific purposes and functionalities:

- Personal assistants: These are designed to help users manage daily tasks. They can set reminders, answer questions, play music, and control smart home devices.

- Customer service assistants: These are used by businesses to handle customer inquiries and support. They can answer frequently asked questions, process orders, and provide product information.

- Virtual health assistants: These AI assistants provide health-related support, such as answering medical questions, reminding patients to take their medications, and even diagnosing minor ailments.

- Educational assistants: These are designed to help students learn. They can answer questions, provide explanations, and offer practice exercises.

- Business assistants: These are used to support various business functions, such as scheduling meetings, managing emails, and analyzing data.

- Specialized assistants: These are tailored for specific industries or tasks. For instance, there are AI assistants for legal research, financial planning, and even creative tasks like generating music or art.

Each type of assistant uses the power of AI to make users' lives easier and more efficient, tailored to their unique needs and contexts. Since 2012, Cazton has been at the forefront of the AI revolution, building AI-powered autonomous agents. By 2020, we pioneered the use of generative AI, developing agents that not only perform tasks but also learn and adapt to new challenges, ensuring your business stays ahead of the competition. These agents harness the power of both large language models (LLMs) and large vision models (LVMs) to assist human co-workers.

Our AI agents are equipped with the ability to see, hear, and talk. By integrating LVMs, they can analyze visual data, recognize patterns, and provide insights that drive decision-making. Advanced speech recognition allows them to understand and process spoken language, enabling seamless voice-driven interactions. Utilizing LLMs, our agents can engage in natural, human-like conversations, providing support, answering queries, and enhancing customer experiences.

Azure OpenAI

Azure OpenAI combines OpenAI's groundbreaking models with the scalability, reliability, and security of Microsoft's Azure platform. Businesses can deploy AI solutions on a global scale, leveraging Azure's vast infrastructure to meet growing demands without compromising performance. Whether you're serving millions of users or running intensive AI workloads, Azure's elastic cloud environment ensures seamless scaling to handle peaks in usage, keeping your operations smooth and efficient.

Security and compliance are at the core of Azure OpenAI. With enterprise-grade protections, data is safeguarded through advanced encryption, secure access controls, and compliance with key standards like GDPR, HIPAA, and ISO/IEC certifications. For industries like finance and healthcare, which demand stringent regulatory adherence, Azure OpenAI provides the tools to integrate AI without risking sensitive information. For example, a bank can use Azure OpenAI to build a secure conversational assistant that provides customers with real-time updates on transactions, account balances, and personalized financial advice - all while maintaining compliance with financial regulations.

Azure OpenAI also streamlines AI integration into existing workflows and applications through native Azure tools like Cognitive Services and Azure DevOps. Teams can take advantage of pre-built APIs, seamless integration with Power BI, and robust support for data analysis and visualization. This allows businesses to deploy end-to-end AI solutions, from training custom models to delivering actionable insights directly to decision-makers.

We craft fully customized AI solutions powered by Azure OpenAI, seamlessly aligned with your business domain to optimize decision-making, automate workflows, and enhance customer engagement. From building intelligent chatbots that provide personalized, real-time support to fine-tuning AI models with your proprietary data for unmatched precision, we ensure every solution is customized to your unique needs. By integrating multiple AI agents into a unified, secure enterprise AI ecosystem, we automate deployment, monitoring, and evaluation processes while enforcing strong guardrails for reliability and compliance. With Azure OpenAI's scalability and robust security, our solutions drive productivity, innovation, and measurable increases in revenue and profitability.

Proven Success Strategies for Enterprise

While OpenAI is good and will get better with time, Cazton can help you with a comprehensive AI strategy that is the best of all worlds: OpenAI technologies, open-source alternatives, and proprietary technologies from major tech companies. Below are some common client concerns and our solutions:

- ChatGPT-like business bots: Imagine having an OpenAI-powered chatbot for every team in your company (sales, marketing, HR, legal, engineering, and more) that boosts your productivity. The bot provides information, simplifies concepts, brings everyone up to speed, removes bottlenecks and roadblocks, provides automated documentation, and enhances team collaboration. All of this is possible on your own data while we protect your data privacy: no other party, including Microsoft or OpenAI, will have access to your data.

- Cost reduction: OpenAI's pricing is based on a pay-as-you-go model. However, clients who want to use generative AI solutions extensively may want to avoid that recurring cost. With a custom solution, there are no ongoing usage fees such as a per-image cost, so the per-use cost of running the model is effectively zero. (1)

- Increased accuracy, precision and recall: OpenAI models and other AI models are not 100% accurate. Our team helps you create solutions with higher relevancy and accuracy. Contact the Cazton team to learn strategies for creating high-quality AI solutions while lowering ongoing costs. We can help with customized models, trained on your business domain, that improve accuracy. Two popular solutions are:

- OpenAI model extension: Creating a customized model on top of an OpenAI model.

- Open-source model extension: Creating a customized model on top of a pre-trained open-source model.

- Offline access: Some clients prefer not to make a call to an external API (like OpenAI). Can we help you with your own model that could be used offline? Absolutely! We can help create a solution based on open-source pre-trained models that can be used offline on a multitude of devices and platforms, including all major operating systems, Docker, IoT devices, and more.

Good news: The Cazton team is well-aware of the limitations, pitfalls, and threats associated with AI solutions, such as hallucinations, accuracy, bias, and security concerns. We constantly strive for higher accuracy, precision, and recall by combining traditional information retrieval techniques with AI and deterministic programming to provide hybrid solutions that deliver enhanced performance. By proactively addressing these challenges and developing innovative solutions, we ensure our customized AI-powered business solutions are reliable, ethical, and secure, fostering trust among users and stakeholders across various industries.
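For readers less familiar with the terms, precision and recall can be computed directly from a list of retrieved items and a set of items known to be relevant. The sketch below uses made-up document IDs purely for illustration; it is a generic definition of the two metrics, not a description of any specific evaluation pipeline.

```python
def precision_recall(retrieved: list, relevant: set) -> tuple:
    """Precision = relevant hits / retrieved items; recall = relevant hits / all relevant items."""
    unique_retrieved = set(retrieved)
    hits = len(unique_retrieved & relevant)
    precision = hits / len(unique_retrieved) if unique_retrieved else 0.0
    recall = hits / len(relevant) if relevant else 0.0
    return precision, recall


# Hypothetical example: the system returned four documents,
# and three documents are actually relevant to the query.
retrieved_ids = ["doc1", "doc2", "doc3", "doc4"]
relevant_ids = {"doc1", "doc3", "doc5"}

# Two of the four retrieved documents are relevant (precision 2/4),
# and two of the three relevant documents were found (recall 2/3).
precision, recall = precision_recall(retrieved_ids, relevant_ids)
```

High precision means few irrelevant results are returned, while high recall means few relevant results are missed. Improving one often costs the other, which is why both are tracked.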

How Cazton Can Help You With OpenAI

Cazton is a team of experts committed to helping businesses build custom, accurate, and secure AI solutions using OpenAI, Azure OpenAI, and open-source technologies. Our team is composed of PhD- and Master's-level experts in data science and machine learning, award-winning Microsoft AI MVPs, open-source contributors, and seasoned industry professionals with years of hands-on experience.

We tackle common challenges such as hallucinations, low accuracy, and suboptimal precision and recall by implementing advanced fine-tuning strategies, rigorous data preprocessing pipelines, and leveraging our deep domain expertise. Our solutions go beyond surface-level fixes, addressing issues like context retention in lengthy conversations, optimizing performance for domain-specific queries, and enhancing multilingual comprehension.

By incorporating techniques such as fine-tuning, Retrieval-Augmented Generation (RAG), Retrieval-Augmented Fine-Tuning (RAFT), embeddings-based search, and prompt engineering (the art of the perfect prompt), we ensure our models provide not just accurate responses, but also relevant, context-aware, and actionable insights customized to each client’s unique needs.
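A toy example may help show how embeddings-based search and RAG relate. In the sketch below, the documents, the three-dimensional vectors, and the question are all invented for illustration. A real system would obtain embeddings from an embedding model and store them in a vector database. The code ranks documents by cosine similarity to the query vector and places the best match into the prompt.

```python
import math

# Hypothetical knowledge base with hand-made 3-dimensional "embeddings".
# A real system would get these vectors from an embedding model.
DOCUMENTS = [
    ("Refunds are processed within 5 business days.", [0.9, 0.1, 0.0]),
    ("Our office is closed on public holidays.", [0.1, 0.9, 0.0]),
    ("Premium plans include 24/7 phone support.", [0.0, 0.2, 0.9]),
]


def cosine_similarity(a: list, b: list) -> float:
    """Cosine of the angle between two vectors; 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query_vector: list, k: int = 1) -> list:
    """Return the text of the k documents most similar to the query vector."""
    ranked = sorted(
        DOCUMENTS,
        key=lambda doc: cosine_similarity(query_vector, doc[1]),
        reverse=True,
    )
    return [text for text, _ in ranked[:k]]


def build_prompt(question: str, passages: list) -> str:
    """Ground the model by putting the retrieved passages ahead of the question."""
    context = "\n".join(f"- {passage}" for passage in passages)
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\nQuestion: {question}"
    )


question = "How long do refunds take?"
query_vector = [0.8, 0.2, 0.1]  # stand-in embedding for the question
prompt = build_prompt(question, retrieve(query_vector, k=1))
```

Because the model is asked to answer from retrieved text rather than from memory alone, this pattern is one common way to reduce hallucinations on domain-specific questions.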

You can trust that your data remains secure, as we prioritize stringent security measures to restrict access solely to authorized personnel. Our primary goal is to provide you with the necessary information and professional guidance to make informed decisions about OpenAI and Azure OpenAI solutions. We believe in empowering our clients with knowledge, rather than pushing sales pitches, so you can confidently choose the best AI partner for your business - Cazton!

We can help you with the full development life cycle of your products, from initial consulting to development, testing, automation, deployment, and scale in an on-premises, multi-cloud, or hybrid environment.

- Fully customized, fine-tuned, fully automated AI agents and solutions: We craft fully tailored AI solutions, perfectly aligned with your business domain, to optimize decision-making, automate workflows, and enhance customer engagement. From developing intelligent chatbots that seamlessly interact with customers to fine-tuning AI with your proprietary data, we build everything - from custom applications to advanced AI agents. We integrate and orchestrate multiple AI agents, creating a sophisticated enterprise AI system that automates deployment, evaluation, and security, all while adding robust guardrails. This approach boosts productivity and drives significant increases in revenue and profits.

- Comprehensive development lifecycle: We offer comprehensive assistance throughout the entire development lifecycle of your products, encompassing various stages from initial consulting to development, testing, automation, deployment, and scalability in on-premises, multi-cloud, or hybrid environments. Our team is adept at providing professional solutions to meet your specific needs.

- Technology stack: We can help create top AI solutions with incredible user experience. We work with the right AI stack using top technologies, frameworks, and libraries that suit the talent pool of your organization. This includes OpenAI, Azure OpenAI, Azure Cosmos DB, MongoDB, Azure AI Search, Spark, Kafka, Hadoop, Redis, Ignite, Semantic Kernel, LangChain, LlamaIndex, PyTorch, TensorFlow, Stable Diffusion, Keras, Scikit-learn, Microsoft Cognitive Toolkit, Pinecone, Qdrant, FAISS, ChromaDB, Weaviate, Theano, Caffe, Torch, and/or others.

- Develop models, optimize them for production, deploy and scale them.

- Best practices: Introduce best practices into the DNA of your team by delivering top quality machine learning (ML) and deep learning (DL) models and then training your team.

- Scalability and performance: We have scalability and performance experts that can help scale legacy applications and improve performance multi-fold.

With Cazton by your side, you're not just keeping up with the future - you're leading it. Let's build something amazing together. Ready to take your career to a completely new level? Contact us today.

Reference:

- (1) Requires Master Services Agreement and Statement of Work.

Cazton is composed of technical professionals with expertise gained all over the world and in all fields of the tech industry, and we put this expertise to work for you. We serve all industries, including banking, finance, legal services, life sciences & healthcare, technology, media, and the public sector. Check out some of our services:

- Artificial Intelligence

- Big Data

- Web Development

- Mobile Development

- Desktop Development

- API Development

- Database Development

- Cloud

- DevOps

- Enterprise Search

- Blockchain

- Enterprise Architecture

Cazton has expanded into a global company, servicing clients not only across the United States, but also in Oslo, Norway; Stockholm, Sweden; London, England; Berlin, Germany; Frankfurt, Germany; Paris, France; Amsterdam, Netherlands; Brussels, Belgium; Rome, Italy; Sydney and Melbourne, Australia; and Quebec City, Toronto, Vancouver, Montreal, Ottawa, Calgary, Edmonton, Victoria, and Winnipeg, Canada. In the United States, we provide our consulting and training services in cities such as Austin, Dallas, Houston, New York, New Jersey, Irvine, Los Angeles, Denver, Boulder, Charlotte, Atlanta, Orlando, Miami, San Antonio, San Diego, San Francisco, San Jose, Stamford, and others. Contact us today to learn more about what our experts can do for you.